Cyborgs are usually depicted as human-machine hybrids in popular fiction. They’ll either be robots with human brains or people who’ve had some of their limbs replaced with machine parts. That’s pretty cool. But, let’s be honest: those are your grandparents’ cyborgs. Ours are better.

In the modern world, the idea of putting a computer chip in your brain isn’t far-fetched at all. And robotic limbs are quickly becoming a reality. But neither of these cybernetic solutions is intended to augment healthy people.

For non-medical cybernetic applications, the ultimate goal of human-machine hybrids should be optimization. It might be super cool to have robotic arms that can bend steel, but arguably there are better ways to accomplish such feats than grafting metal to your flesh.

A fork in the road

Experts argue that the advent of always-on internet connectivity and technologies such as Google Search, Wikipedia, and YouTube has created an entirely new species of human.

Rather than a physiological evolution, such as the ones our ancestors went through on their way out of the oceans, into the trees, and ultimately to walking upright, we’re undergoing a technology-induced separation of the species.

Arguably, Homo sapiens is a critically endangered species. Most humans interface with the internet via smartphones or computers on a daily basis. Eventually, nearly every person on Earth will have always-on connectivity to the internet via a personal device.

And, when that happens, Homo sapiens will become something else: Homo sapiens 2.0, perhaps. Or maybe something else entirely; it’s quite conceivable we’ll experience physiological changes to our brains as we continue to integrate our mental space with machines.

Less traveled

Many humans are already cyborgs. If you take everything that’s cool about RoboCop and then say “what if we made it wireless instead,” what you get is a cop with an iPhone and a smart gun.

This is because the modern definition of cyborg includes the idea of using computers as an extension of our own minds.

Think about it: how many phone numbers do you have memorized? Probably not as many as you would have in the 1990s.

For most of us, this is because we’ve allowed our technology to ease our mental burdens. Why memorize phone numbers when our phones can do it for us?

As Slate’s Will Oremus pointed out back in 2018, this is actually by design:

Google is now beckoning you to accept its software as part of your extended mind, in all kinds of new ways. It promises to think for you, speak for you, and carry out actions in the real world on your behalf.

More traveled

One need look no further than social media to see both cyborgs and old-school Homo sapiens interacting with one another.

When we tell people to “Google it,” we’re asking them to use their readily available cybernetic augmentations to access the hive mind in order to save time. We’re trying to optimize the human race. Because we’re cyborgs.

However, not everyone is a cyborg. Old-school Homo sapiens often choose human interaction over a Google Search because they’re genuinely curious about other people’s opinions.

Perhaps they’re not interested in feeling like a cloud-connected, boring version of RoboCop who summons his Tesla with an app and controls his home via Amazon Alexa.

The future

Only future historians will have the proper perspective from which to declare the beginning of the cyborg era.

But we’re going to go ahead and say that it’s already begun.

Googling celebrity birthdays and using a calculator app might not make you feel like a badass cyborg, but the sheer amount of information available to humans in the modern world is unfathomable.

You could live a thousand lifetimes and never learn everything there is to know on the internet. That makes the ability to quickly find what you’re looking for online a life skill that will likely only grow in importance.

Those of us capable of embracing our technology to the fullest, who commit to the cyborg paradigm, stand to gain the most. We’ll be always-connected, cutting-edge, and able to stay a step ahead of unevolved Homo sapiens.

At least until the power goes out.

At the end of the day, you don’t have to purchase property in the metaverse or own NFT collectibles to embrace modern technology.

You’re a cyborg if you rely on personal technology to do your light work so you can free up your beautiful organic brain for more important things.

You either log off ashamed of your own face, or you stay on Zoom long enough to become a narcissist.

Those are the options being presented by researchers, pundits, and a host of other people who started writing about COVID-19 and working from home a few years back under the pretense that things would be back to normal by Christmas… of 2020.

It’s 2022 now, and we’re hitting a fever pitch when it comes to pontificating on the finer nuances of human psychology through the microscopic lens of a webcam.

“Not everybody hates looking at themselves on Zoom” proclaims WSU Insider’s Sara Zaske.

Their article references recent research from Washington State University associate professor Kristine Kuhn. In a survey of more than 80 workers who’d been displaced from the office and forced to work remotely due to COVID-19, Kuhn found that some folks didn’t like seeing themselves on camera and others did.

Per the research:

Two studies of people attending regular virtual meetings, one conducted with newly remote employees from a variety of organizations and one with business students shifted to remote learning, test this assumption. In both studies, the association between frequency of self-view during meetings and aversion to virtual meetings was contingent on a dispositional trait: the user’s degree of public self-consciousness.

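Kuhn’s work recognizes that individual results may vary, but the general divide is interesting to note: whether seeing yourself during meetings makes them more aversive depends on how publicly self-conscious you are.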

Whether or not you’re comfortable on a Zoom call isn’t necessarily a good indicator of employee value or productivity.

If you, for example, are a news anchor, it might be a big deal. But most of us should reasonably expect to be able to retain gainful employment whether we’re good on camera or not.

However, we don’t live in a perfect world. People who thrive in front of their own digital visage can potentially get a leg up on those of us who’d rather not be forced to make eye contact with ourselves.

And, worse, people who experience genuine anxiety at the prospect of spending time on camera every day can find themselves somewhat ostracized as society hurtles toward the complete normalization of the idea that being employed means being monitored on camera.

Luckily, the big silly zeitgeist of 2022 is certain to be the metaverse. If you’re wondering what the metaverse is, it’s whatever any given company’s marketing team says it is.

Here’s the only important thing you really need to know about the metaverse right now: it won’t be a VR-only experience. A small percentage of the population simply cannot experience VR without getting sick.

And, unless you work in the VR sector, making VR a requirement would be arbitrary and purposeless discrimination.

The metaverse will have VR components, but the big sell here is that the metaverse is its own complete “thing” unto itself. So, you probably wouldn’t log in to the VR metaverse and then log out of it to go on a videoconferencing call.

The future that big tech sees for all of us is one wherein your presence in the metaverse is as permanent and unique as you are. If you buy an NFT hat for your avatar to wear, it’ll be there in VR. It’ll also sync automatically to your verified social media accounts and all your connected web profiles. You don’t go to the metaverse; it’s just there.

This means there’s going to be a huge impetus for companies such as Meta (formerly Facebook) and Google to integrate metaverse concepts into their business software. In other words: Google Meet is probably going to go to great lengths to incorporate some form of metaverse avatar integration right there in the app. Gmail will probably have metaverse integration. Metaverse all the things!

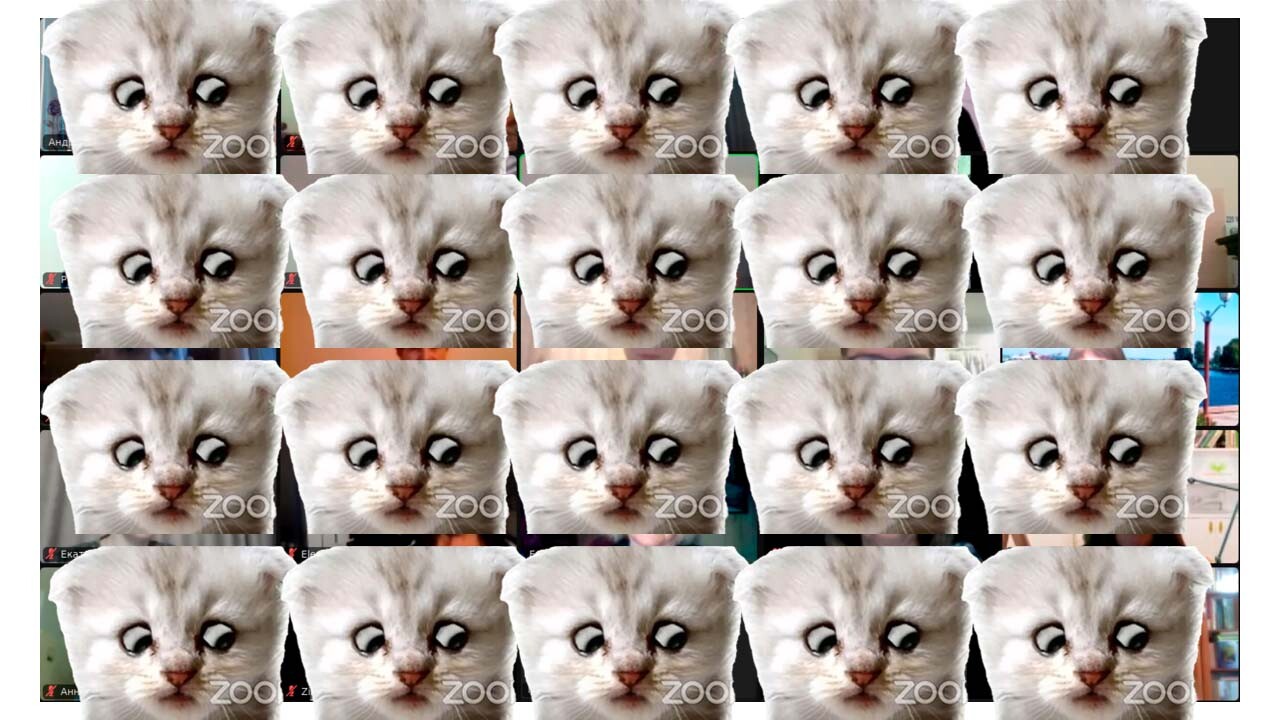

Sure, we can do stuff like that already. We’ve all seen the hilarious videos of important people accidentally turning themselves into wacky avatars. But the metaverse threatens to do for silly things like cartoon avatars and paying real money for fake hats what the internet and Facebook did for self-photography and non-traditional media enterprises. It’ll legitimize them, normalize them, and make them profitable.

Why? Because there’s gold in them thar hills! What’s the point of convincing a tiny portion of the population to buy really expensive NFTs when you can convince a giant portion of the population that it’s just as important to have a nice shirt on your avatar as it is to shower before you physically go to the office?

No matter how you feel about the metaverse, digital shirts, or big tech, it’s important to keep the big picture in mind: if these predictions come true, I’ll never have to be on cam again.

I can roll out of bed, click a button to dress up my avatar in a business suit, and look presentable for a meeting with my company’s CEO. Meanwhile, in reality, I look like someone who stuck their finger in a light socket and I’m wearing footie pajamas.